153'600 Extra Pixels on the Framework 13

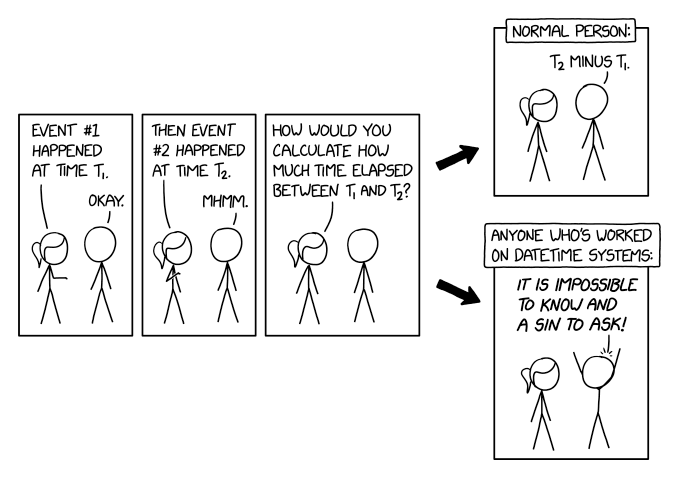

Wasted screen real estate: With the non-maximal built-in modes of the Framework, you get black bars at the top and bottom of the screen. Here is how this comes about and how it can be fixed.

One thing I both like and hate about my still-new Framework 13 is the screen. The 3:2 aspect ratio is a very welcome step back from the 16:9 screens I've been living with on my two notebooks of the past 20 years. You see, for most things you want to do with a computer other than watching movies, widescreen just does not work too well. 3:2 (or 0.67, taking the short side over the long one), on the other hand, is quite close to the ideal document aspect ratio of 0.71 (1:√2, or about 1:1.41, as exhibited by our beloved DIN A paper formats[1]).

Too many pixels

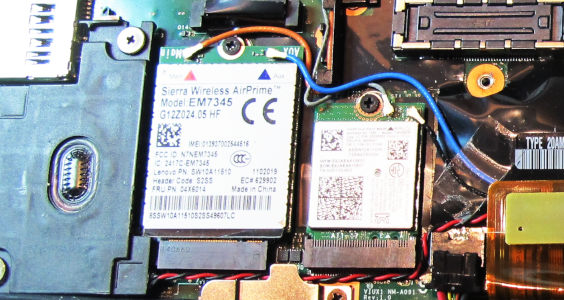

But then the screen has an awfully high native resolution of 2256x1504. This does not mix well with conventional screens that have about 90 dpi, and I want to drive these from this box, too. It also does not work well with the many pixel-based programs I still want to run (Window Maker dockapps, mostly).
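To put a number on "awfully high": pixel density is the diagonal in pixels divided by the diagonal in inches. The sketch below assumes a 13.5-inch panel diagonal, which is my assumption about the Framework 13 and not a figure stated in this post; the function name `dpi` is made up for illustration. Under that assumption, the native mode comes out at roughly 201 dpi, a little over twice the ~90 dpi of conventional screens.

```shell
#!/bin/sh
# Sketch: pixel density from resolution and diagonal size.
# ASSUMPTION: the 13.5-inch diagonal below is not taken from the post.
dpi() {
    # $1 = width in pixels, $2 = height in pixels, $3 = diagonal in inches
    awk -v w="$1" -v h="$2" -v d="$3" \
        'BEGIN { printf "%.0f\n", sqrt(w * w + h * h) / d }'
}

dpi 2256 1504 13.5
```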

What I ended up doing was lowering the resolution to 1920x1200 (which, with about 6 pixels per millimeter or 150 dpi, is still plenty on a colour screen less than 20 cm high) and setting:

```
Xft.dpi: 130
```

on the built-in screen while having Xft.dpi: 92 on the external screens (using strategic xrdb calls when setting these displays up). That's not ideal, but workable.
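A minimal sketch of what such a strategic xrdb call could look like. The function name `dpi_for_output` is hypothetical, and treating every output other than the built-in `eDP-1` (the panel's output name as used further down) as a ~90 dpi external screen is an assumption; the two dpi values are the ones given above.

```shell
#!/bin/sh
# Hypothetical helper: pick an Xft.dpi value per output.
# ASSUMPTION: anything that is not eDP-* is a conventional external screen.
dpi_for_output() {
    case "$1" in
        eDP-*) echo 130 ;;  # built-in 3:2 panel
        *)     echo 92 ;;   # conventional external screens
    esac
}

# Merge the value into the X resource database, but only when an X server
# and xrdb are available. Most programs only read Xft.dpi when they start,
# so this belongs before anything else is launched.
if [ -n "$DISPLAY" ] && command -v xrdb >/dev/null 2>&1; then
    printf 'Xft.dpi: %s\n' "$(dpi_for_output eDP-1)" | xrdb -merge
fi
```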

To actually lower the resolution, I had:

```
xrandr -s 1920x1200
```

quite high up in my ~/.xinitrc (which I use to set up my X session; I suspect in a less exotic setup, you could place stuff like this into /etc/X11/Xsession.d/10-local – but I didn't actually try that).

Making up Modes

Why did I choose 1920x1200, with an aspect ratio of 8:5, when the screen is 3:2, you ask? Well, don't ask me. Ask whoever provided the built-in modes of the video card: 1920x1200 was in that list, the 3:2 mode 1920x1280 was not.
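Both aspect ratios are easy to check by dividing width and height by their greatest common divisor (Euclid's algorithm). A small sketch; the helper names `gcd` and `aspect` are made up for illustration:

```shell
#!/bin/sh
# Greatest common divisor via Euclid's algorithm (integer arithmetic only).
gcd() {
    a=$1 b=$2
    while [ "$b" -ne 0 ]; do
        t=$((a % b)); a=$b; b=$t
    done
    echo "$a"
}

# Reduce WIDTH and HEIGHT to the smallest integer ratio, e.g. 8:5.
aspect() {
    g=$(gcd "$1" "$2")
    echo "$(($1 / g)):$(($2 / g))"
}

for mode in 2256x1504 1920x1200 1920x1280; do
    echo "$mode -> $(aspect "${mode%x*}" "${mode#*x}")"
done
```

It prints 3:2 for the native mode, 8:5 for 1920x1200 and 3:2 for the missing 1920x1280.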

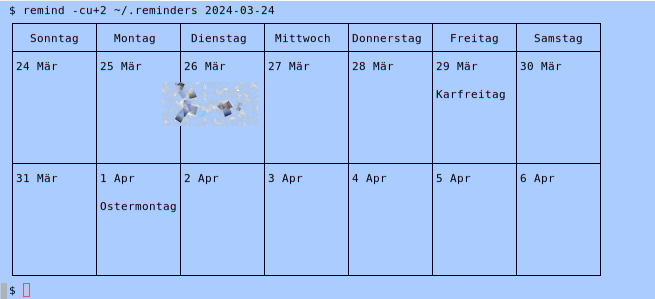

The result is, not surprisingly, black bars of 40 lines each at the top and bottom of the screen. What a deplorable waste of screen real estate! What a scandalous and needless deviation from the ideal aspect ratio of 141:100!
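The size of the bars, and the number in the title, follow from simple arithmetic. This sketch assumes the panel scales the 1920-pixel width to its full width and keeps the aspect ratio, which is what puts the bars at the top and bottom rather than at the sides:

```shell
#!/bin/sh
# A 3:2 mode that is 1920 pixels wide has 1920 * 2 / 3 = 1280 lines;
# the 8:5 mode only has 1200 of them.
width=1920
height=1200
full_height=$((width * 2 / 3))            # lines of a 3:2 mode this wide
bar=$(((full_height - height) / 2))       # black lines at top and at bottom
lost=$((width * (full_height - height)))  # pixels left unused in total
echo "bar height: $bar lines, unused pixels: $lost"
```

This prints `bar height: 40 lines, unused pixels: 153600`.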

All indignation aside, I lived with that for a while, until I remembered that one can make up video modes, and sometimes these actually work. So, can I create the missing 3:2 mode with a horizontal resolution of 1920 pixels? Actually, cvt makes that fairly simple:

```
$ cvt 1920 1280 60
# 1920x1280 59.96 Hz (CVT) hsync: 79.57 kHz; pclk: 206.25 MHz
Modeline "1920x1280_60.00"  206.25  1920 2056 2256 2592  1280 1283 1293 1327 -hsync +vsync
```
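As a sanity check on cvt's comment line, the refresh rate and horizontal sync frequency can be recomputed from the modeline: hsync is the pixel clock divided by the horizontal total, and the refresh rate is the pixel clock divided by horizontal total times vertical total. A sketch using awk; the function name `modeline_rates` is hypothetical:

```shell
#!/bin/sh
# Input fields (the modeline without keyword and name):
#   clock(MHz) hdisp hsyncstart hsyncend htotal vdisp vsyncstart vsyncend vtotal
modeline_rates() {
    awk '{
        clock = $1 * 1e6; htotal = $5; vtotal = $9
        printf "hsync: %.2f kHz; refresh: %.2f Hz\n",
               clock / htotal / 1e3, clock / (htotal * vtotal)
    }'
}

echo '206.25 1920 2056 2256 2592 1280 1283 1293 1327' | modeline_rates
```

For these timings it prints `hsync: 79.57 kHz; refresh: 59.96 Hz`, matching what cvt reports.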

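This needs a tiny bit of massaging before xrandr will accept it, and hence before it can go into a shell script: the Modeline keyword goes, the mode gets a shorter name, and I also left off cvt's sync flags. So, in my .xinitrc, there is now this to make the black bars vanish: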

```
xrandr --newmode "1920x1280" 206.25 1920 2056 2256 2592 1280 1283 1293 1327
xrandr --addmode eDP-1 "1920x1280"
xrandr -s 1920x1280
```
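The massaging can also be scripted rather than done by hand. This is a sketch, not what the .xinitrc above does: `cvt_to_newmode_args` is a hypothetical helper that strips the `Modeline` keyword and cvt's quoted mode name from cvt's output and puts a name of your choosing in front. Unlike the hand-edited lines above, it keeps cvt's `-hsync +vsync` flags, which `xrandr --newmode` also accepts.

```shell
#!/bin/sh
# Hypothetical helper: read cvt output on stdin, print the arguments for
# "xrandr --newmode" on one line, with the mode renamed to $1.
cvt_to_newmode_args() (
    name=$1
    # Keep only the Modeline line, minus the keyword and cvt's quoted name.
    timings=$(sed -n 's/^Modeline "[^"]*"[[:space:]]*//p')
    [ -n "$timings" ] || exit 1
    # Re-split on whitespace (globbing off) to collapse runs of spaces.
    set -f
    set -- $timings
    printf '%s %s\n' "$name" "$*"
)

# Possible use in ~/.xinitrc (not run here):
#   args=$(cvt 1920 1280 60 | cvt_to_newmode_args 1920x1280)
#   xrandr --newmode $args          # unquoted on purpose: one word per field
#   xrandr --addmode eDP-1 1920x1280
#   xrandr -s 1920x1280
```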

[1] Actually, the German Wikipedia, on its page on DIN formats, claims that octavo sheets used to have an aspect ratio of 2:3, which was considered more serious than the “softer” quarto at 3:4. The main point being: nobody ever considered paper with an aspect ratio of 16:9.
