Gambling advertising with the Finance Ministry

I'm happy to confess that I don't always pull the magazines of the GEW (the education and science union), in the case of the federal organisation “Erziehung und Wissenschaft”, out of the letterbox with great curiosity and devour them on the spot. But at some point they do end up on top of the reading pile, and so a little while ago, in issue 10/24, I came across an advertisement by the Federal Ministry of Finance (BMF)[1]:

“Mit Geld und Verstand” (“with money and sense”), a “Festival für Finanzbildung” (festival of financial education), no less: who wouldn't be gripped by the worry that the finance and science ministries are using public money to poison teachers' brains with advertising for the whole get-rich, money-gambling, multi-level-marketing and competition nonsense?

Multi-Level Mess

Anyone who doesn't want to bother with nerd whining can skip ahead to Finally: The Conference!. But I think the ministry's web mess is quite entertaining for Muggles, too.

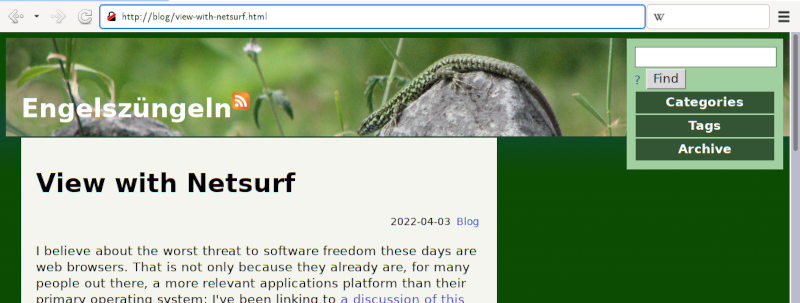

I wanted to check the propaganda density for myself, so I typed :o http://mitgeldundverstand.de/festival into my browser; in Luakit, that ought to take me to the corresponding web page.

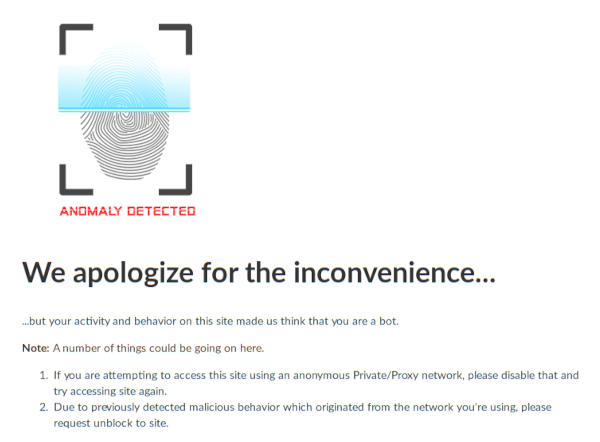

But no such luck. Whether because of the missing JavaScript, the current phase of the moon, my user agent string (“Tracking is lame”) or whatever else, I instead ended up on a page on the server validate.perfdrive.com:

Let me quickly put that into plain language: a bot has decided that I am a bot, and so, instead of landing on a page of Christian Lindner's ministry, I end up on an even more dubious web offering of unknown provenance.

I must honestly say that I can't even imagine what the BMF needs bot protection for at all, particularly for static web pages, which, unless built really stupidly, are hard to DoS anyway. Is anyone really going to bother bringing these dreary pages to their knees through overload (“DoSsing” them)?

Perfdrive for what exactly?

And if someone really did take pity and granted the BMF webmasters 15 minutes of fame: where would be the problem if that person vented their spleen for a few days? Given figureheads like the not exactly likeable finance minister, I won't even try to feign complete incomprehension of this person's motivation.

So what would happen after a few days of BMF web absence due to such a hypothetical DoS attack? The stuff posted there won't be noticeably worse or noticeably better afterwards than it is today. Nobody misses out on customers, and Spiegel and the INSM certainly do the BMF's PR better than the ministry itself. So why this “bot protection”?

Perfdrive.com, incidentally, the “service provider” the BMF relies on for “bot protection”, doesn't even run a machine listening on www.perfdrive.com, which, to me at least, does not inspire much confidence in their ability to “fend off” a DoS. The page served by validate.perfdrive.com also carries no privacy notice or any other hint of whom one is actually talking to. Only a Google captcha shows up, provided one allows JavaScript.

Using whois one can at least find out that the outfit running this thing is based in Tempe, Arizona. That a federal ministry nonchalantly has my personal data (at least my IP address and the compromising fact that I'm interested in its nonsense) processed by a US company fits every Lindner cliché (“Bedenken second”, a nod to the FDP slogan “Digital first. Bedenken second.”).
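The whois lookup can be reproduced by hand, since WHOIS (RFC 3912) is a very simple protocol: open a TCP connection to port 43, send the query terminated by CR LF, and read until the server closes the connection. A minimal Python sketch, assuming the .com registry server whois.verisign-grs.com; the post does not say which server or client was used, and the function names are made up for illustration.

```python
import socket

# Assumption: the registry WHOIS server for .com domains; the post does not
# say which server or tool was actually used.
DEFAULT_SERVER = "whois.verisign-grs.com"
WHOIS_PORT = 43  # RFC 3912: WHOIS runs over TCP port 43


def build_whois_request(query: str) -> bytes:
    """RFC 3912: the client sends the query followed by CR LF."""
    return query.strip().encode("ascii") + b"\r\n"


def whois_lookup(query: str, server: str = DEFAULT_SERVER, timeout: float = 10.0) -> str:
    """Send one WHOIS query (hypothetical helper) and return the reply as text."""
    with socket.create_connection((server, WHOIS_PORT), timeout=timeout) as sock:
        sock.sendall(build_whois_request(query))
        chunks = []
        # The server closes the connection once its reply is complete.
        while True:
            data = sock.recv(4096)
            if not data:
                break
            chunks.append(data)
    return b"".join(chunks).decode("utf-8", errors="replace")
```

Calling `whois_lookup("perfdrive.com")` would return the registry's record. For .com domains the Verisign registry typically names only the registrar and its WHOIS server; contact details such as a location would have to be looked up there, or via an IP WHOIS query for the server's address.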

Self-ironic cookie banners

But I want to be fair: judging by the traceroute output, which ends at a machine with a Twelve99 name, the machine where my packets at least landed is operated by what was once Telia (a semi-legendary Swedish telecoms company) and could therefore potentially be located in the EU (in Sweden?). But I'm not really convinced: from Frankfurt to the next router on the way to the server rented by perfdrive, my packets took 80 ms, which means they could have travelled up to 24,000 km. The whole bot diagnosis could therefore have taken place anywhere in the world, including Frankfurt or Sweden, since delays on the order of 80 milliseconds can always accumulate in electronics even without any light travel time.
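The 24,000 km figure follows from a simple upper bound: nothing travels faster than light, so distance ≤ speed × time. A small Python sketch, assuming the rounded vacuum value of 300,000 km/s and, for comparison only, about 200,000 km/s (roughly two thirds of c) for light in optical fibre. Because traceroute reports round-trip times, the bound covers the path there and back, so the router itself would be at most half that distance away.

```python
# Rounded speed of light in vacuum (km/s), the figure behind the 24,000 km estimate.
C_VACUUM_KM_S = 300_000
# Assumption for comparison: light in optical fibre travels at roughly 2/3 c.
C_FIBRE_KM_S = 200_000


def max_path_km(delay_ms: float, speed_km_s: float = C_VACUUM_KM_S) -> float:
    """Upper bound on the path length a signal can cover within delay_ms."""
    return speed_km_s * delay_ms / 1000


rtt_ms = 80  # delay measured from Frankfurt to the next router
path = max_path_km(rtt_ms)        # total path, there and back
one_way = path / 2                # traceroute times are round trips
fibre_one_way = max_path_km(rtt_ms, C_FIBRE_KM_S) / 2

print(f"path <= {path:,.0f} km, router <= {one_way:,.0f} km away "
      f"(fibre: <= {fibre_one_way:,.0f} km)")
# prints: path <= 24,000 km, router <= 12,000 km away (fibre: <= 8,000 km)
```

Either way, the bound is loose: as the paragraph notes, processing and queueing delays eat into those 80 ms as well, so the real distance can be far shorter.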

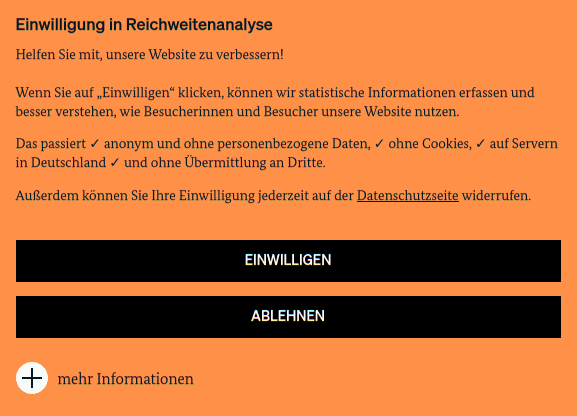

With Firefox instead of Luakit, perfdrive currently generously decides that I'm not a bot and delivers the ministerial web page without my having to earn Google's blessing via captcha. Annoyingly, but not really surprisingly, the page first confronts its visitors with a particularly obviously superfluous cookie banner, which itself states that they use no cookies and process no personal data:

Setting aside the fundamental question of why the ministry wants to track the few people who put themselves through its rubbish in the first place: couldn't the staff responsible have held back, given that (by their own account) they process no personal data at all? What were they thinking when they dreamed up a cookie banner anyway? “Cookie banners are best practice throughout the industry!” or what?

Still, I'd like to ask the people who came up with this (involuntary?) parody of a cookie banner: if it isn't my personal data at all, how can I consent to its processing in the first place?

Finally: The Conference!

After all this needless hassle I did manage to take a look at the BMF's conference. However, I was socialised in academia, which here means that before I look at what is being discussed, I look at who is discussing it[2]. So I gritted my teeth, clicked through the tedious pagination of the speakers page and sorted the 118 speakers of the “festival” into classes, to get an idea of what the teachers there were presumably meant to be fuelled with. The result up front:

| Type of home institution | # |
|---|---|
| Public banks | 10 |
| Private banks | 4 |
| Other private companies | 11 |
| Consulting and reactionary “science” | 55 |
| Civil society | 20 |
| Executive | 9 |
| The desperate | 7 |
| Schools | 2 |

I sometimes couldn't separate consulting firms and reactionary science (i.e. people from economics faculties and the relevant non-university institutes) sharply enough, and since they tell the same story anyway, I didn't find the distinction important.

Under “The desperate” I filed people who struck me as panicky and precarious, and who came across as if their stories of austerity and competitive success were an attempt to deny, or even halt, their own descent into material misery by resolutely taking the side of the rich.

96:22 against laziness

So we have 22 representatives (civil society and schools) whom I'd trust to argue in the interest of living people, while the other 96 will surely have sung the praises of small folk trading shares, funds, derivatives and commodities, so that the teachers pass on the fantasy of a rentier existence for all to their poor pupils. Accordingly, things in the lecture rooms will have gone pretty much throughout as in Thomas Kehl's talk, whose abstract already captures the whole confusion:

Classic interest-bearing investments such as savings accounts, however, are not enough to counter inflation. It is important to take control of one's own finances and to engage with asset classes such as equity ETFs [I, too, first had to get that abbreviation expanded: these are exchange-traded, i.e. also filled by small savers, pooled lottery^H^H^H^H^H^H^Hinvestments] in order to secure one's retirement provision.

Anyone who doesn't immediately see the confusion in that is referred to the section “Ursprüngliche Gewalt” (primordial violence) in the laziness post.

Incidentally, the 22:96 ratio against people-friendly content still flatters the BMF considerably, because ten of the presumably people-friendly twenty-two came from the consumer advice centres (Verbraucherzentralen) alone. None of them had “proper” talks, just a stand at which they, presumably a bit in the manner of Gallic villagers, also gave some short presentations.
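For anyone who wants to redo the sums, here is a minimal Python sketch of the tally: it takes the category counts from the speaker table, treats civil society and schools as the potentially people-friendly group (as the post does), and subtracts the ten consumer-centre people who only had a stand. It only repeats the arithmetic; the labels are the table's rows.

```python
# Speaker counts per category, copied from the speaker table.
speakers = {
    "Public banks": 10,
    "Private banks": 4,
    "Other private companies": 11,
    "Consulting and reactionary science": 55,
    "Civil society": 20,
    "Executive": 9,
    "The desperate": 7,
    "Schools": 2,
}

# Categories counted as likely to argue in the interest of living people.
FRIENDLY = {"Civil society", "Schools"}
# Ten of the friendly speakers (consumer advice centres) only had a stand.
STAND_ONLY = 10

total = sum(speakers.values())
friendly = sum(n for category, n in speakers.items() if category in FRIENDLY)
rest = total - friendly

print(f"{total} speakers: {rest}:{friendly}, about {rest / friendly:.1f} to 1")
print(f"at most {friendly - STAND_ONLY} of the {friendly} gave proper talks")
# prints:
# 118 speakers: 96:22, about 4.4 to 1
# at most 12 of the 22 gave proper talks
```

The second line is only an upper bound: the debt counselling people, for instance, also had nothing more than a stand.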

Also not deemed worthy of a talk by the BMF was what I consider an extremely important contribution from civil society: information on debt counselling. After all, a large share of small investors will pretty much inevitably end up there. Yet that topic, too, only rated a stand.

No, the talks were rather about tapping new, easily exploitable customer resources, which brings us full circle to the GEW, in whose magazine the BMF advertised its promotional event. What is a particular challenge for teachers is a particular opportunity for financial service providers:

Successful application of financial knowledge [sc. about shares, ETFs and commodity futures] namely requires cognitive abilities such as self-regulation, which includes, among other things, steering impulses, controlling emotions and self-motivation. People with ADHD in particular have difficulties with this and are therefore more prone to financial problems.

To make financial education offerings even more effective and to live up to our responsibility for transferring insights into everyday life, we should take a closer look at the challenges faced by people with ADHD. The workshop offers insights into central mechanisms of self-regulation and raises awareness of invisible hurdles that can make using financial knowledge harder for people without ADHD as well.

No further questions, your honour. My closing argument: the plan to seduce ordinary people into wasting their time on “financial products” should by itself suffice to convict the BMF's nasty “festival”. Whether the passage just quoted is specifically meant to target especially vulnerable groups can then be left open.

Comment 1 on 2024-10-28

In cooperation with #Attac Deutschland, the Otto Brenner Stiftung commissioned the education scientist Professor Thomas #Höhne (Helmut-Schmidt-Universität Hamburg) to examine the federal government's initiative. His conclusion: the initiative does not live up to its claim of promoting independent #finanzielleBildung (financial education). Rather, the real aim of the #FDP-dominated initiative appears to be a party-political one https://www.attac.de/presse/detailansicht/news/finanzbildung-mit-parteipolitischer-agenda-studie-kritisiert-die-einseitigkeit-der-finanzbildungsinitiative-der-bundesregierung

| [1] | OK: the BMBF (i.e. the Federal Ministry of Education and Research) signed as well, but my strong impression is that this happened more out of party loyalty than out of any affinity for the content. |

| [2] | Here I don't want any … |
