Feedback and Addenda in Pelican Posts

Blog comments may be dead out there; here, I'd like to at least pretend they're still alive, and thus I've written a pelican plugin to properly mark them up.

When I added a feedback form to this site about a year ago, I also created a small ReStructuredText (RST) extension for putting feedback items into the files I feed to my blog engine Pelican. The extension has been sitting in my pelican plugins repo on codeberg since then, but because there has not been a lot of feedback on either it or the posts here (sigh!), that was about it.

But occasionally a few interesting (or at least provocative) pieces of feedback did come in, and I thought it's a pity that basically nobody will notice them[1] or, (cough) much worse, my witty replies.

At the same time, I had quite a few addenda to older articles, and I felt some proper markup for them (plus better chances for people to notice they're there) would be nice. After a bit of consideration, I figured the use cases are similar enough, and I started extending the feedback plugin to cover addenda, too. So, you can pull the updated plugin from codeberg now. People running it on their sites would certainly be encouragement to add it to the upstream's plugin collection (after some polishing, that is).

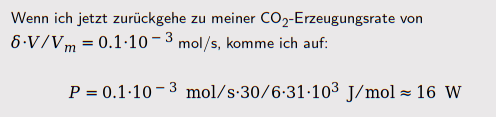

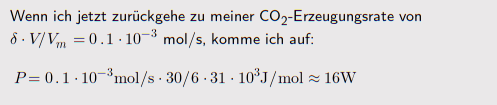

Usage is simple – after copying the file to your plugins folder and adding "rstfeedback" to PLUGINS in pelicanconf.py, you write:

.. feedback::

:author: someone or other

:date: 2022-03-07

Example, yadda.

for some feedback you got (you can nest these for replies) or:

.. addition:: :date: 2022-03-07 Example, yadda.

for some addition you want to make to an article; always put in a date in ISO format.

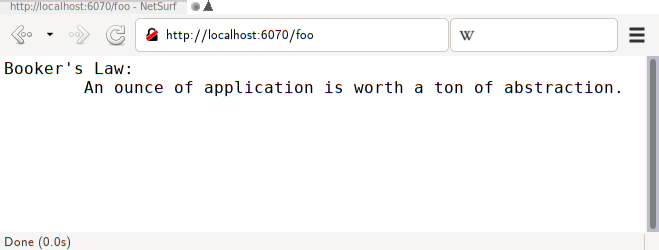

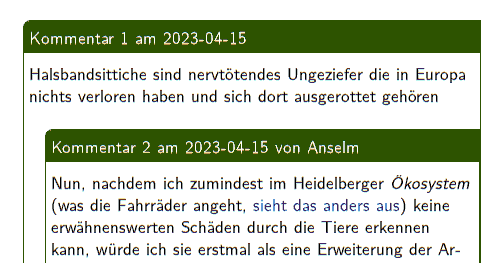

In both cases a structured div element is generated in the HTML, which you can style in some way; the module comment shows how to get what's shown in the opening figure.

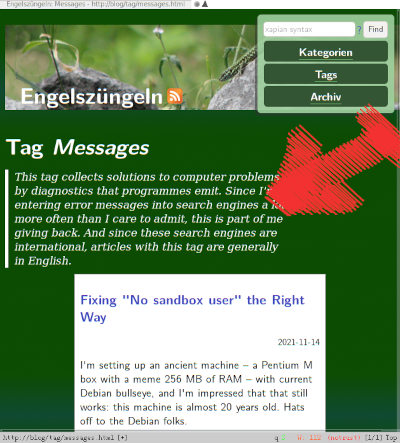

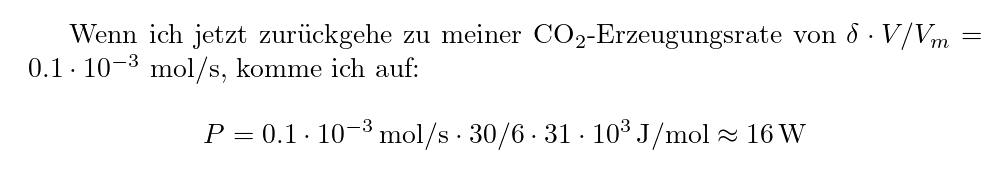

The extension also adds a template variable LAST_FEEDBACK_ITEMS containing a list of the last ten changes to old posts. Each item is an instance of some ad-hoc class with attributes url, kind (feedback or addendum), the article title, and the date. On this blog, I'm currently formatting it like this in my base template:

<h2>Letzte Ergänzungen</h2>

<ul class="feedback">

{% for feedback_item in LAST_FEEDBACK_ITEMS %}

<li><a href="{{ SITEURL }}/{{ feedback_item.url }}">{{ feedback_item.kind }} zu „{{ feedback_item.title }}“</a> ({{ feedback_item.date }})</li>

{% endfor %}

</ul>

As of this post, this block is at the very bottom of the page, but I plan to give it a more prominent place at least on wide displays real soon now. Let's see when I feel like a bit of CSS hackery.

Caveats

First of all, I have not localised the plugin, and for now it generates German strings for “Kommentar” (comment), “Nachtrag” (addendum) and “am” (on). This is relatively easy to fix, in particular because I can tell an article's language from within the extension from the article metadata. True, that doesn't help for infrastructure pages, but then these won't have additions anyway. If this found a single additional user, I'd happily put in support for your preferred language(s) – I should really be doing English for this one.

This will only work with pages written in ReStructuredText; no markdown here, sorry. Since in my book RST is so much nicer and better defined than markdown and at the same time so easy to learn, I can't really see much of a reason to put in the extra effort. Also, legacy markdown content can be converted to RST using pandoc reasonably well.

If you don't give a slug in your article's metadata, the plugin uses the post's title to generate a slug like pelican itself does by default. If you changed that default, the links in the LAST_FEEDBACK_ITEMS will be wrong. This is probably easy to fix, but I'd have to read a bit more of pelican's code to do it.

I suppose the number of recent items – now hardcoded to be 10 – should become a configuration variable, which again ought to be easy to do. A more interesting (but also more involved) additional feature could be to have per-year (say) collections of such additions. Let's say I'm thinking about it.

Oh, and error handling sucks. That would actually be the first thing I'd tackle if other people took the rstfeedback plugin up. So… If you'd like to have these or similar things in your Pelican – don't hesitate to use the feedback form (or even better your mail client) to make me add some finish to the code.

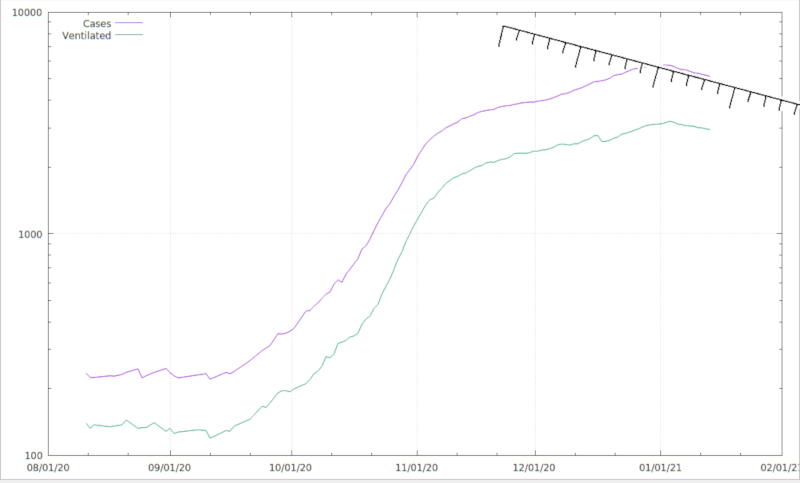

| [1] | I made nginx write logs (without IP addresses, of course) for a while recently, and the result was that there's about a dozen human visitors a day here, mostly looking at rather recent articles, and so chances are really low anyone will ever see comments on old articles without some extra exposure. |

![[RSS]](../theme/image/rss.png)