How to Block a USB Port on Smart Hubs in Linux

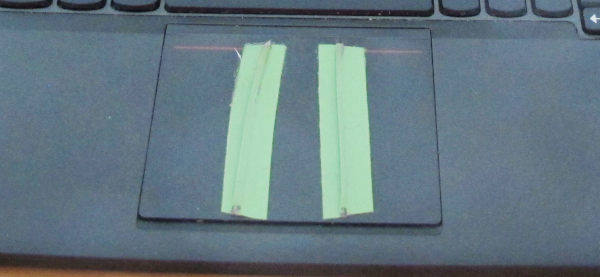

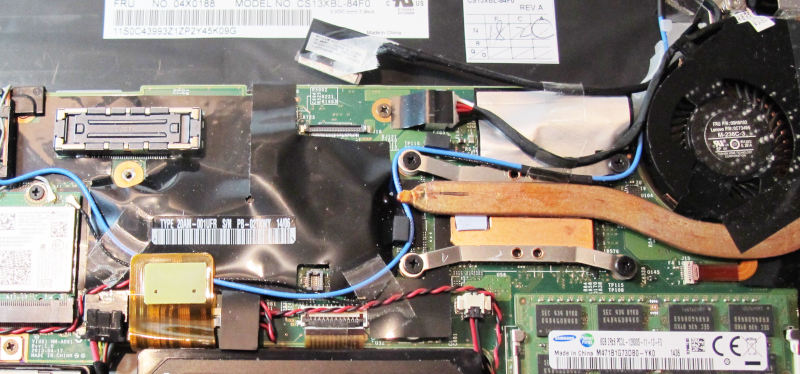

Somewhere beneath the fan on the right edge of this image there is breakage. This post is about how to limit the damage in software until I find the leisure to dig deeper into this pile of hitech.

My machine (a Lenovo X240) has a smart card reader built in, attached to its internal USB. I don't need that device, but until a while ago it did not really hurt either. Yes, it may draw a bit of power, but I'd be surprised if that were more than a few milliwatts or, equivalently, one level of screen backlight brightness; at that level, not even I will bother.

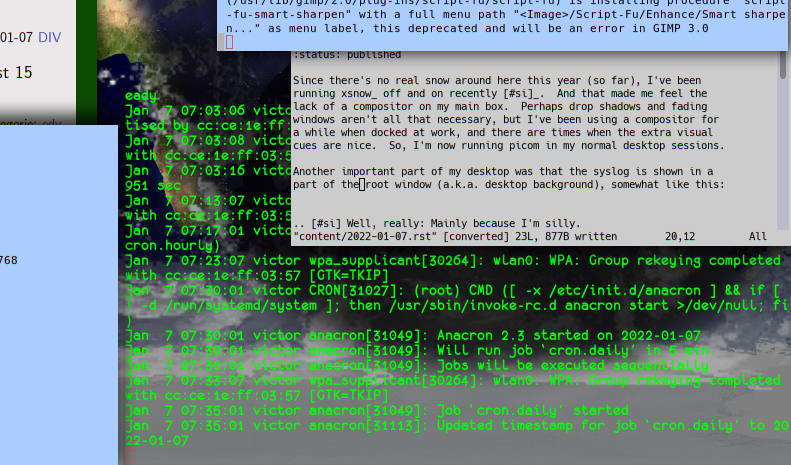

However, two weeks ago the thing started to become flaky, presumably because the connecting cable is starting to rot. The symptom is that the USB stack regularly re-registers the device, spewing a lot of characters into the syslog, like this:

Aug 20 20:31:51 kernel: usb 1-1.5: USB disconnect, device number 72 Aug 20 20:31:51 kernel: usb 1-1.5: new full-speed USB device number 73 using ehci-pci Aug 20 20:31:52 kernel: usb 1-1.5: device not accepting address 73, error -32 Aug 20 20:31:52 kernel: usb 1-1.5: new full-speed USB device number 74 using ehci-pci Aug 20 20:31:52 kernel: usb 1-1.5: New USB device found, idVendor=058f, idProduct=9540, bcdDevice= 1.20 Aug 20 20:31:52 kernel: usb 1-1.5: New USB device strings: Mfr=1, Product=2, SerialNumber=0 Aug 20 20:31:52 kernel: usb 1-1.5: Product: EMV Smartcard Reader Aug 20 20:31:52 kernel: usb 1-1.5: Manufacturer: Generic Aug 20 20:31:53 kernel: usb 1-1.5: USB disconnect, device number 74 Aug 20 20:31:53 kernel: usb 1-1.5: new full-speed USB device number 75 using ehci-pci [as before] Aug 20 20:32:01 kernel: usb 1-1.5: new full-speed USB device number 76 using ehci-pci Aug 20 20:32:01 kernel: usb 1-1.5: New USB device found, idVendor=058f, idProduct=9540, bcdDevice= 1.20 Aug 20 20:32:01 kernel: usb 1-1.5: New USB device strings: Mfr=1, Product=2, SerialNumber=0 [as before] Aug 20 20:32:02 kernel: usb 1-1.5: USB disconnect, device number 76

And that's coming back sometimes after a few seconds, sometimes after a few 10s of minutes. Noise in the syslog is never a good thing (even when you don't scroll syslog on the desktop), as it will one day obscure something one really needs to see, and given that device registrations involve quite a bit of computation, this also is likely to become relevant power-wise. In short: this has to stop.

One could just remove the device physically or at least unplug it. Unfortunately, in this case that is major surgery, which in particular would involve the removal of the CPU heat sink. For that I really want to replace the thermal grease, and I have not been to a shop that sells that kind of thing for a while. So: software to the rescue.

With suitable hubs – the X240's internal hub with the smart card reader is one of them – the tiny utility uhubctl lets one cut power to individual ports. Uhubctl regrettably is not packaged yet; you hence have to build it yourself. I'd do it like this:

sudo apt install git build-essential libusb-dev git clone https://github.com/mvp/uhubctl cd uhubctl prefix=/usr/local/ make sudo env prefix=/usr/local make install

After that, you have a program /usr/local/sbin/uhubctl that you can run (as root or through sudo, as it needs elevated permissions) and that then tells you which of the USB hubs on your system support power switching, and it will also tell you about devices connected. In my case, that looks like this:

$ sudo /usr/local/sbin/uhubctl Current status for hub 1-1 [8087:8000, USB 2.00, 8 ports, ppps] Port 1: 0100 power [...] Port 5: 0107 power suspend enable connect [058f:9540 Generic EMV Smartcard Reader] [...]

This not only tells me the thing can switch off power, it also tells me the flaky device sits on port 5 on the hub 1-1 (careful inspection of the log lines above will reconfirm this finding). To disable it (that is, power it down), I can run:

$ sudo /usr/local/sbin/uhubctl -a 0 -l 1-1 -p 5

(read uhubctl --help if you don't take my word for it).

Unfortunately, we are not done yet. The trouble is that the device will wake up the next time anyone touches anything in the wider vicinity of that port, as for instance run uhubctl itself. To keep the system from trying to wake the device up, you also need to instruct the kernel to keep its hands off. For our port 5 on the hub 1-1, that's:

$ echo disabled > /sys/bus/usb/devices/1-1.5/power/wakeup

or rather, because you cannot write to that file as a normal user and I/O redirection is done by your shell and hence wouldn't be influenced by sudo:

$ echo disabled | sudo tee /sys/bus/usb/devices/1-1.5/power/wakeup

That, indeed, shuts the device up.

Until the next suspend/resume cycle that is, because these settings do not survive across one. To solve that, arrange for a script to be called after resume. That's simple if you use the excellent pm-utils. In that case, simply drop the following script into /etc/pm/sleep.d/30killreader (or so) and chmod +x the file:

#!/bin/sh

case "$1" in

resume|thaw)

echo disabled > /sys/bus/usb/devices/1-1.5/power/wakeup

/usr/local/sbin/uhubctl -a 0 -l 1-1 -p 5

;;

esac

exit 0

If you are curious what is going on here, see /usr/share/doc/pm-utils/HOWTO.hooks.gz.

However, these days it is rather unlikely that you are still leaving suspend and hibernate to pm-utils; instead, on your box this will probably be handled by systemd-logind. You could run pm-utils next to that, I suppose, if you tactfully configured the host of items with baroque names like HandleLidSwitchExternalPower in logind.conf, but, frankly, I wouldn't try that. Systemd's reputation for wanting to manage it all is not altogether undeserved.

I have tried to smuggle in my own code into logind's wakeup procedures years ago in systemd's infancy and found it hard if not impossible. I'm sure it is simpler now. If you know a good way to make logind run a script when resuming: Please let me know. I promise to amend this post for the benefit of people running systemd (which, on some suitable boxes, does include me).

![[RSS]](../theme/image/rss.png)